Mice Detection in Go Using OpenCV and MachineBox

Basil sourced from disneyclips and gopher sourced from gopherize.me.

Background

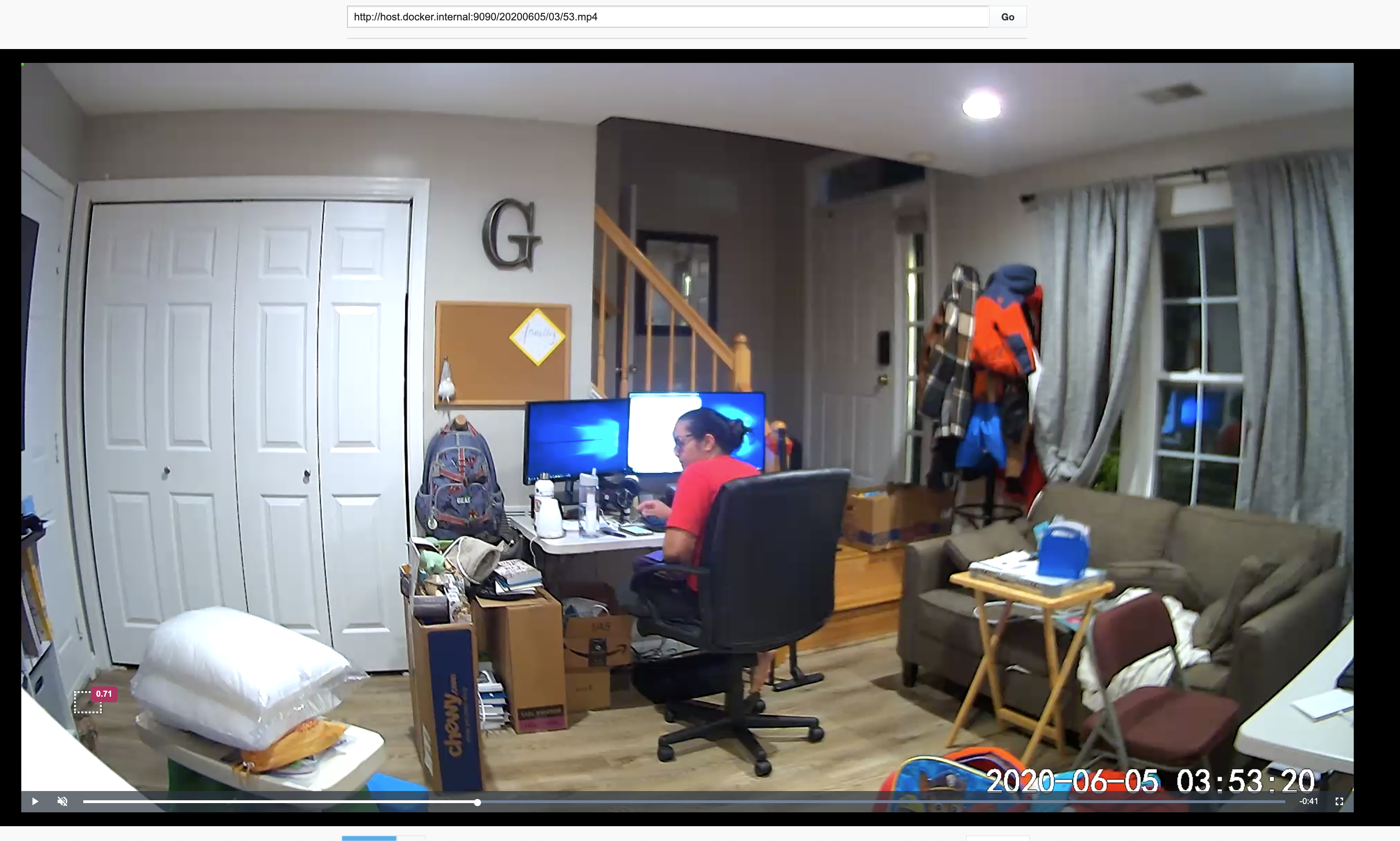

Last week, we had an unwanted visitor. My wife was working late in our COVID-19 office space when she heard something rustling. She looked over and...

Did you catch that? Our little mouse visitor quickly scurried to our closet in the lower left corner of the video. Of course, my wife is horrified and would like to throw the whole house away. How long has he been in the house? How many do we have? Why, oh why?! With all of the things going on in addition to the pandemic, like the mistaken shooting of Breonna Taylor, the attempted cover up of the killing of Ahmaud Arbery, and the horrible execution of George Floyd, this unwanted visitor was a nice reprieve.

We are fortunate to have a camera (a Wyze Cam Pan) set up in the basement already, and it just happened to catch the path the mouse favored. However, the Wyze Cam saves videos in one-minute intervals, so going through nearly 7-thousand videos looking for a mouse to scurry by in a fraction of a second would be a major time sink. Seems like a perfect problem for a software engineer.

Training the model

Being a systems engineer, I haven't had the need for any machine learning in my day-to-day (yet), but I figured I would need to train a model to detect the mouse in the videos I had. I've been using Go for most of my side projects lately, so I unwittingly just searched for:

golang process video detect

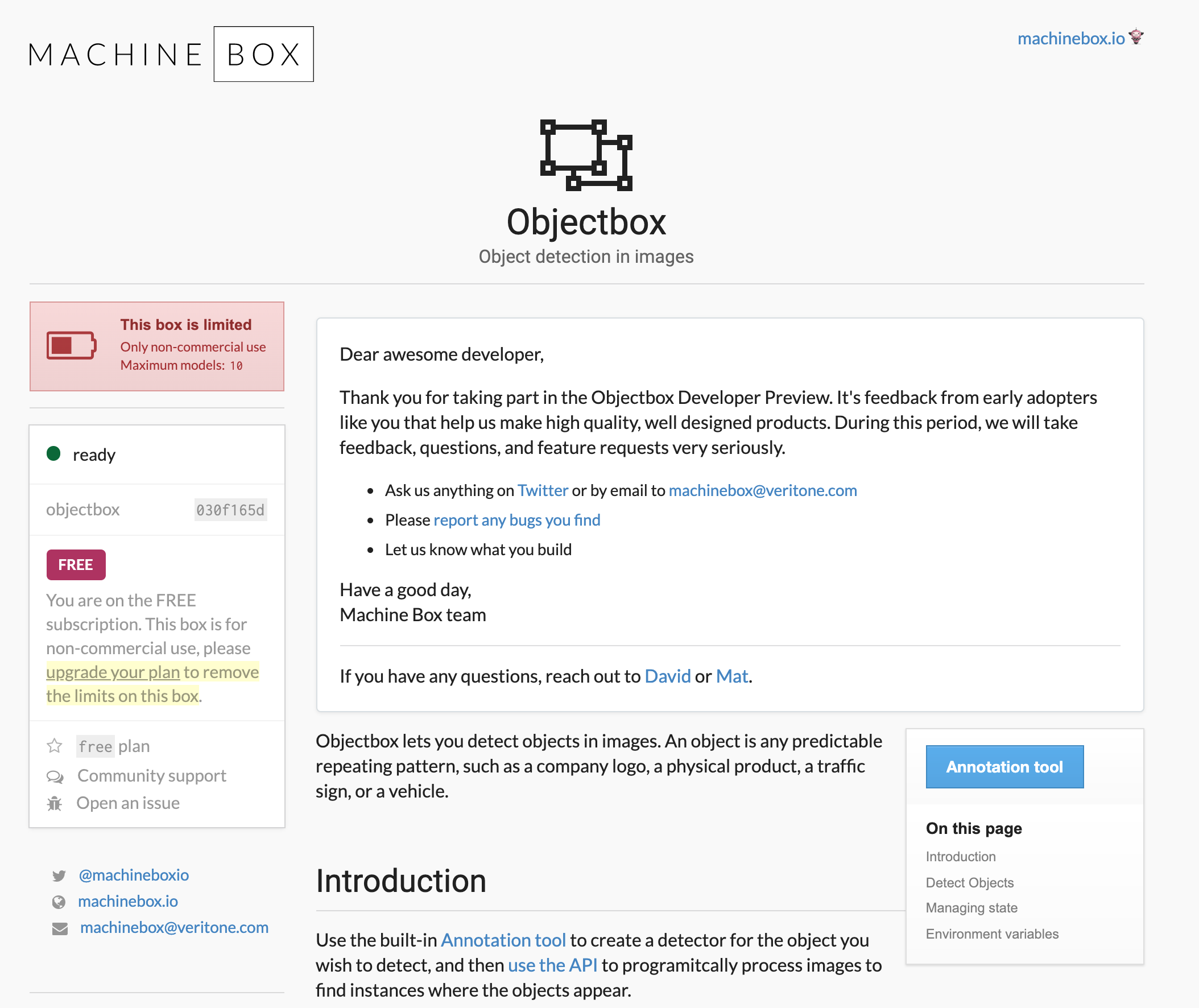

In the results I found some references for MachineBox. Looking through the docs it seemed like their Objectbox was what I needed to train a model to detect the unwanted rodent.

After setting up a free account with MachineBox, getting the model trained was really straightforward. I used `docker-compose` to set up the box in a container and exported my `MB_KEY` in my shell environment. After a `docker-compose up`, the container was running and I was able to access the box at http://localhost:8083 and view the Objectbox documentation.

```yaml
version: '3'
services:
  objectbox1:
    image: machinebox/objectbox
    environment:
      - MB_KEY=${MB_KEY}
      - MB_OBJECTBOX_ANNOTATION_TOOL=true
    ports:
      - "8083:8080"
    volumes:
      - objectboxdata:/boxdata
volumes:
  objectboxdata:
```

Objectbox landing page

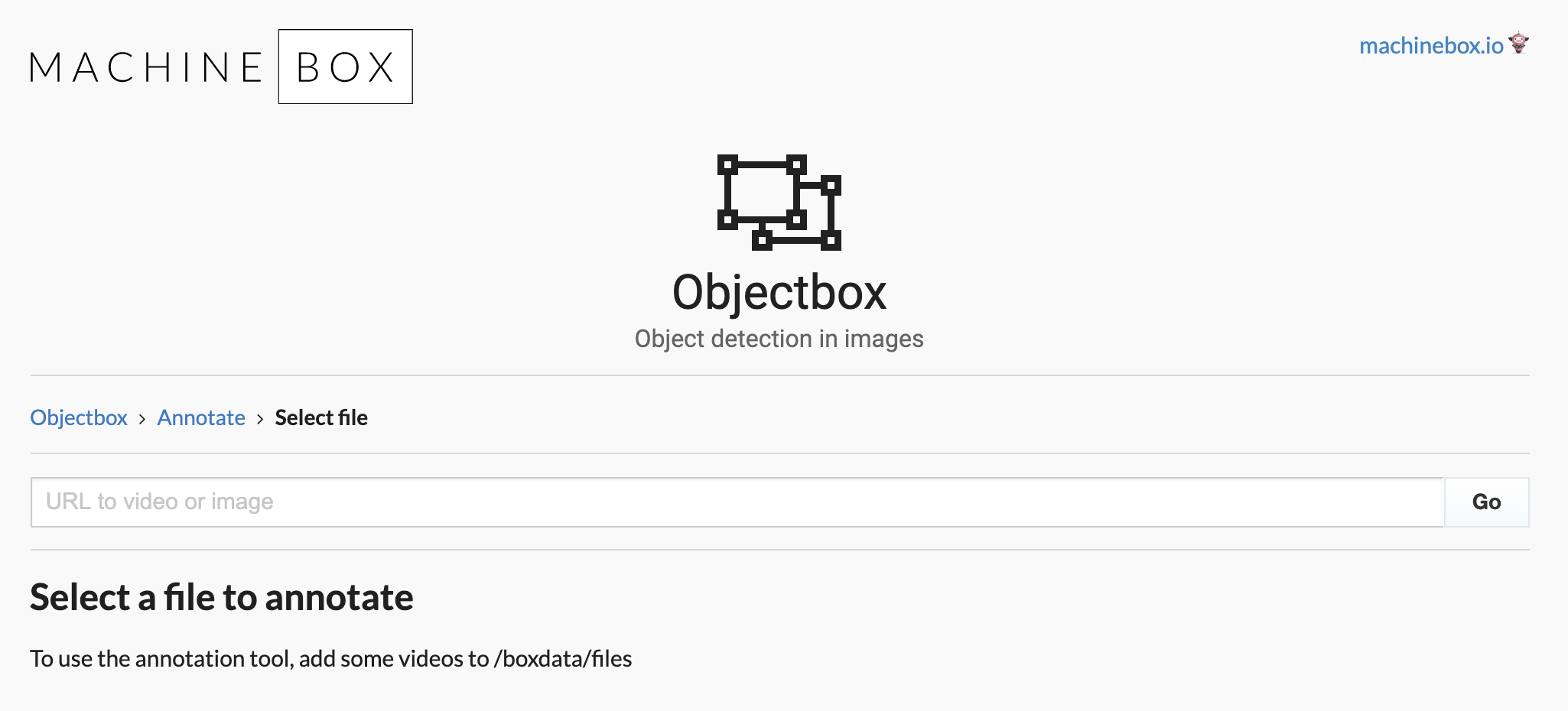

Training the model was relatively straightforward using the annotation tool. Since I knew the time my wife actually saw the mouse, I found the one-minute video file with her screech. From the UI, it seemed that the annotation tool would only accept a URL to a video or image, so I wrote a little file server to provide a local URL instead of hosting the video somewhere. According to their blog, you should be able to mount a folder with the training videos into the container, but I couldn't find it in their docs 😞. I assume the videos would be available in the /boxdata mount point.
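For reference, a file server like the one mentioned above can be very small. The following is a minimal sketch, not the code from the repository: the `./videos` directory, the `:8000` address, and the `newFileHandler` helper are all hypothetical names chosen for illustration.

```go
package main

import (
	"flag"
	"log"
	"net/http"
)

// newFileHandler returns a handler that serves the files under dir.
// (Hypothetical helper, not part of the original project.)
func newFileHandler(dir string) http.Handler {
	return http.FileServer(http.Dir(dir))
}

func main() {
	// Both defaults are assumptions for this sketch.
	dir := flag.String("dir", "./videos", "directory holding the training videos")
	addr := flag.String("addr", ":8000", "address to listen on")
	flag.Parse()

	log.Printf("serving %s on %s", *dir, *addr)
	log.Fatal(http.ListenAndServe(*addr, newFileHandler(*dir)))
}
```

One thing to keep in mind: if the box fetches the URL from inside its container, `localhost` there refers to the container itself, so the URL may need to use an address at which the container can reach the host.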

Objectbox annotation tool

I won't go into too much detail on how to annotate the video. Their demo video does a decent job describing what you want to do. I found the frames with the mouse, annotated them, and clicked "Start training" and let it do its thing.

Annotation in action

Processing the video

Now for the fun part. At a high level, we have a channel for video frames and a channel for results. The extractor reads in the video file and places each frame in the frames channel. In concurrent goroutines, the checkers send the frames to the Objectbox container. If the model detects a mouse, then we save that frame to a file.

representation of the structure of the program.

Frame extraction

We'll be using GoCV to extract frames from the video. In a goroutine the video is streamed and each frame is encoded and placed in a channel for processing.

```go
// extractFrames streams the video at filename, JPEG-encodes each frame, and
// sends it on the returned frame channel. The error channel receives exactly
// one value when extraction stops: an *endOfFile once the video is exhausted,
// or the error that ended extraction early.
func extractFrames(done <-chan struct{}, filename string) (<-chan frame, <-chan error) {
	framec := make(chan frame)
	errc := make(chan error, 1)

	go func() {
		defer close(framec)

		video, err := gocv.VideoCaptureFile(filename)
		if err != nil {
			errc <- err
			return
		}
		// Release the native capture handle and Mat when the goroutine exits.
		defer video.Close()

		frameMat := gocv.NewMat()
		defer frameMat.Close()

		errc <- func() error {
			n := 1
			for {
				if !video.Read(&frameMat) {
					return &endOfFile{n}
				}
				buf, err := gocv.IMEncode(gocv.JPEGFileExt, frameMat)
				if err != nil {
					return err
				}
				select {
				case framec <- frame{n, buf}:
				case <-done:
					return errors.New("frame extraction canceled")
				}
				n++
			}
		}()
	}()
	return framec, errc
}
```

Frame checker

In concurrent goroutines, frames are pulled from the frames channel and sent to the Objectbox container. If the model detects a mouse in the frame, then we construct a result and place it in the channel.

```go
// Channel of frames with mice.
results := make(chan result)
var wg sync.WaitGroup
wg.Add(concurrencyF)

// Process the frames by fanning out to concurrencyF workers.
log.Println("Start processing frames")
for i := 0; i < concurrencyF; i++ {
	go func() {
		defer wg.Done()
		checker(done, frames, results)
	}()
}

// When all workers are done, close the results channel.
go func() {
	wg.Wait()
	close(results)
}()
```

```go
type result struct {
	// The frame number.
	frame int
	// The detectors that found at least one object in the frame.
	detectors []objectbox.CheckDetectorResponse
	// The JPEG bytes that were sent to the model.
	file io.Reader
	err  error
}

func checker(done <-chan struct{}, frames <-chan frame, results chan<- result) {
	objectClient := objectbox.New("http://localhost:8083")
	info, err := objectClient.Info()
	if err != nil {
		log.Fatalf("could not get box info: %v", err)
	}
	log.Printf("Connected to box: %s %s %s %d", info.Build, info.Name, info.Status, info.Version)

	// Process each frame from the frames channel.
	for f := range frames {
		if f.number == 1 || f.number%10 == 0 {
			log.Printf("Processing frame %d\n", f.number)
		}
		// Copy the bytes read by the Objectbox request into bufferRead so the
		// image can be written to a file later.
		var bufferRead bytes.Buffer
		tee := io.TeeReader(bytes.NewReader(f.buffer), &bufferRead)

		resp, err := objectClient.Check(tee)
		if err != nil {
			// Report the failure instead of silently skipping the frame.
			select {
			case results <- result{frame: f.number, err: err}:
			case <-done:
				return
			}
			continue
		}

		// Keep only the detectors that found at least one object.
		detectors := make([]objectbox.CheckDetectorResponse, 0, len(resp.Detectors))
		for _, t := range resp.Detectors {
			if len(t.Objects) > 0 {
				detectors = append(detectors, t)
			}
		}
		if len(detectors) == 0 {
			continue
		}
		select {
		case results <- result{frame: f.number, detectors: detectors, file: &bufferRead}:
		case <-done:
			return
		}
	}
}
```

Results processor

Finally, we drain the results channel and encode the images with the mouse highlighted. The Objectbox response provides the coordinates of the detected object, so we can use them to draw a rectangle around the found object. I tried using the standard lib here, but it seemed as though I'd need to iterate through each pixel within a context to draw a rectangle. I found gg which is a nice little 2D rendering library.

```go
// processResults drains the results channel, outlines every detected object
// in green, and saves the annotated frame as a JPEG.
func processResults(results <-chan result) {
	for r := range results {
		if r.err != nil {
			log.Printf("Frame result with an error: %v\n", r.err)
			continue
		}
		log.Printf("Mouse detected! frame: %d, detectors: %v\n", r.frame, r.detectors)

		// Named img to avoid shadowing the standard image package.
		img, err := jpeg.Decode(r.file)
		if err != nil {
			log.Printf("Unable to decode image: %v", err)
			continue
		}

		imgCtx := gg.NewContextForImage(img)
		green := color.RGBA{50, 205, 50, 255}
		imgCtx.SetColor(color.Transparent)
		imgCtx.SetStrokeStyle(gg.NewSolidPattern(green))
		imgCtx.SetLineWidth(1)

		// Outline every object each detector reported, not just the first.
		for _, d := range r.detectors {
			for _, o := range d.Objects {
				left := float64(o.Rect.Left)
				top := float64(o.Rect.Top)
				width := float64(o.Rect.Width)
				height := float64(o.Rect.Height)
				imgCtx.DrawRectangle(left, top, width, height)
				imgCtx.Stroke()
			}
		}

		cleanedFilename := strings.ReplaceAll(filenameF, "/", "-")
		frameFile := path.Join(outputDirF, Version+"-"+cleanedFilename+"-"+strconv.Itoa(r.frame)+".jpg")

		err = gg.SaveJPG(frameFile, imgCtx.Image(), 100)
		if err != nil {
			log.Printf("Unable to create image: %v\n", err)
			continue
		}
	}
}
```

Here's a gif of the frames for one of the videos!

Generated with `convert rendered-frames/*${filedate}* rendered-frames.gif`

Wrapping up

With the program set up to process a video, I ran all 4-thousand-ish videos through it to find the first one with a mouse. Woot!

You can find the full code for this here: grocky/mouse-detective.